AI Training

March 12, 2025

Article by

Mindrift Team

Artificial intelligence is only as good as the data it’s trained on.

It takes more than engineers and data scientists to build AI models—they need AI Trainers to contribute their expertise, writing skills, and in-depth knowledge.

AI Trainers are the backbone of the AI revolution, working hard to help LLMs (large language models) go beyond simple mimicry. They’re helping AI models become more helpful, ethical, and trustworthy, with the ability to hold natural conversations.

Sounds interesting? Let’s break down the AI Trainer roles at Mindrift and dive into how you can get involved!

What does an AI Trainer do?

To say that AI Trainers contribute their efforts to advancing AI is true … but it’s also pretty vague. Here’s what an AI Trainer’s actual day might look like.

Morning:

Log into the platform, read messages, and check tasks.

Spend a few hours creating high-quality prompts, having multi-turn conversations, and rating AI model responses based on a rubric.

Afternoon:

Take a long leisurely lunch!

Dedicate some time to studying, working, or enjoying hobbies.

Log back in to finish up some tasks from the morning.

Evening:

Jump onto Mindrift’s Discord and network with other AI Trainers.

Check emails to find an invitation for a new AI training project.

Dedicate some time to reviewing project guidelines and completing qualification quizzes.

Of course, no two days will look the same, but that’s the beauty of this freelance, flexible opportunity. Whether you like to start your day at 6 a.m. or 6 p.m., you can design your own schedule—one that fits your lifestyle.

Training AI at Mindrift

Our collective of freelancers is made up of individuals with diverse educational, professional, and cultural backgrounds. Mindrift's AI Trainers are:

New graduates seeking flexible part-time remote opportunities

Stay-at-home professionals leveraging their expertise to earn extra income

Specialists taking career breaks seeking meaningful and intellectually stimulating activities

Non-technical professionals who want to contribute to AI projects and shape the future of education and communication

Unique roles for everyone

Whether you're a skilled copywriter or have experience in STEM, coding, medicine, natural sciences, humanities, or other fields, Mindrift offers opportunities tailored to your interests:

AI Trainer – Domain Expert: Suited for professionals from a wide range of fields including physicists, chemists, computer scientists, historians, lawyers and more.

AI Trainer – Writer: Ideal for talented copywriters, journalists, linguists, and other wordsmiths seeking diverse topics and projects.

AI Trainer – Editor: Great for editors and language-savvy folks who are interested in flexible projects and are ready to work on content used for AI training.

Some roles require a combination of Bachelor's degree, Master's degree, and specific soft skills but technical expertise is usually not a prerequisite. Head over to our AI Tutor Roles page to learn more about each category and see where you fit in!

Work anywhere, anytime

We have freelancers from all over the world, working in different time zones and countries. All you need is a laptop, a reliable internet connection, access to the Mindrift platform and the desire to advance AI. The freedom to submit work from anywhere, anytime is yours—as long as tasks are available.

No coding required

The AI Trainer role doesn't require a machine learning or data analytics degree, or expertise in AI technology. Familiarity with AI models, training data, and algorithms is not a must-have either. However, continuous learning is always welcome!

AI Trainers at Mindrift are supported by dedicated QAs (quality assurance) who create a comfortable space for project-related communication, feedback, and mutual support. We provide detailed guidelines, onboarding materials, and access to a helpful community.

How the platform works

Mindrift is not a job, it’s a flexible remote opportunity designed to connect freelancers interested in training AI models and clients working in the AI space to collaborate on unique projects. Let’s take a closer look at how it all works.

The application process

The first step is heading over to our Open Expert Roles page. Once you choose the domain that fits you best, you’ll be taken to the application.

Read the role requirements and description carefully! We take attention to detail seriously so if you don’t meet the requirements, please check if another role might suit you better before applying.

Once you fill in the application, that’s it! If we think you’re a good fit, someone from our recruitment team will get in touch with next steps. This might require completing some knowledge and language quizzes or sitting down for a quick interview.

Talent pool vs. Immediate projects

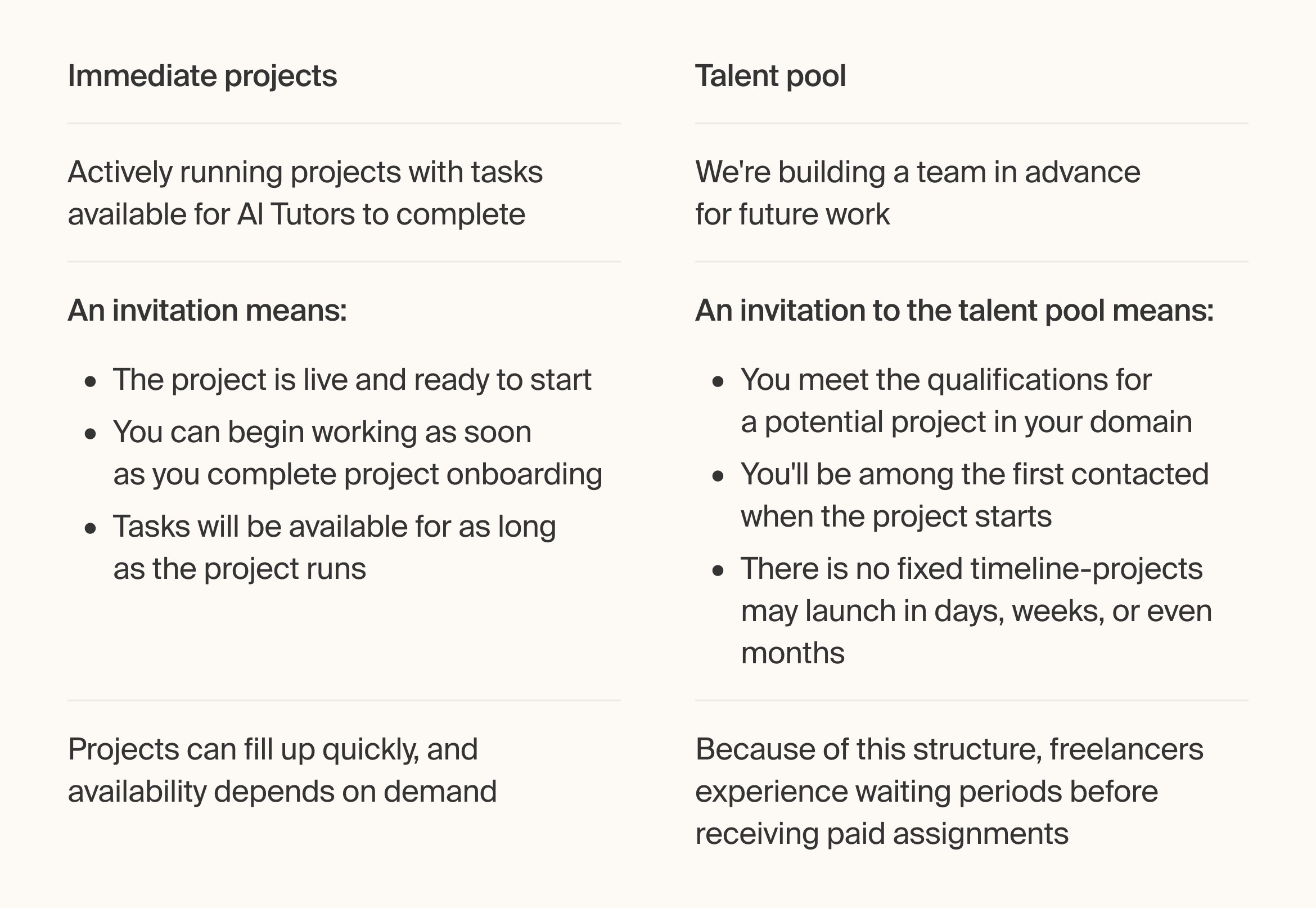

One major distinction our AI Trainers (and applicants!) need to understand is the difference between our talent pool invitation and an immediate project invitation.

Our role is to connect our clients with the right experts for their AI projects. Because we work with multiple clients who often have multiple projects, we have a need for a diverse group of freelancers with expertise in different domains.

But our clients projects have beginnings, ends, and pilot phases. Some projects are very small and specialized, others are broad and general. A project might run several months or only a few weeks. Our solution for this quickly changing demand is the talent pool!

The talent pool is a collection of freelancers, also known as our domain experts, who are registered on the platform but not for a specific project. Once a project that meets their expertise arises, we invite them to join. Projects aren’t guaranteed and participation is elective.

This is very different from immediate project invitations, which come up when we need to fill roles for a new project with specific requirements. We might not have any freelancers with these particular skills or our talent pool might be too small for the client’s needs, so we advertise for experts with these skillsets.

Freelancers invited to these projects will go through the qualification process, onboarding stage, and then into the project work. Once the project concludes, they’ll be moved to the talent pool until another suitable project comes up.

All that to say—projects aren’t guaranteed and might not come up right away, but rest assured that we’re always trying to fit the best fit for our freelancers and clients!

Join the Mindrift community

Our community is filled with experts from every walk of life working toward the same goal: making AI models safer, smarter, and better for everyone. Think you have what it takes to help?

“I highly recommend this role to someone who is open-minded to upcoming changes, passionate about technology, brave enough to embrace uncertainties, patient with training, and generous with sharing knowledge.”

Chloe Zhang, QA at Mindrift

Ready to join the Mindrift community? Check out our open roles and talent pools to get started.

Explore AI opportunities in your field

Browse domains, apply, and join our talent pool. Get paid when projects in your expertise arise.

Article by

Mindrift Team